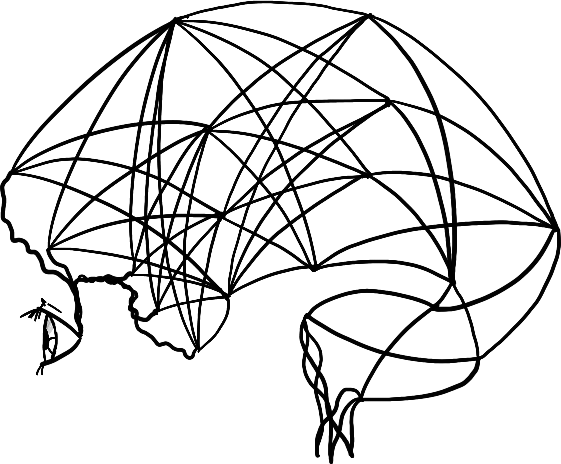

Making a graph of names from their popularity over time

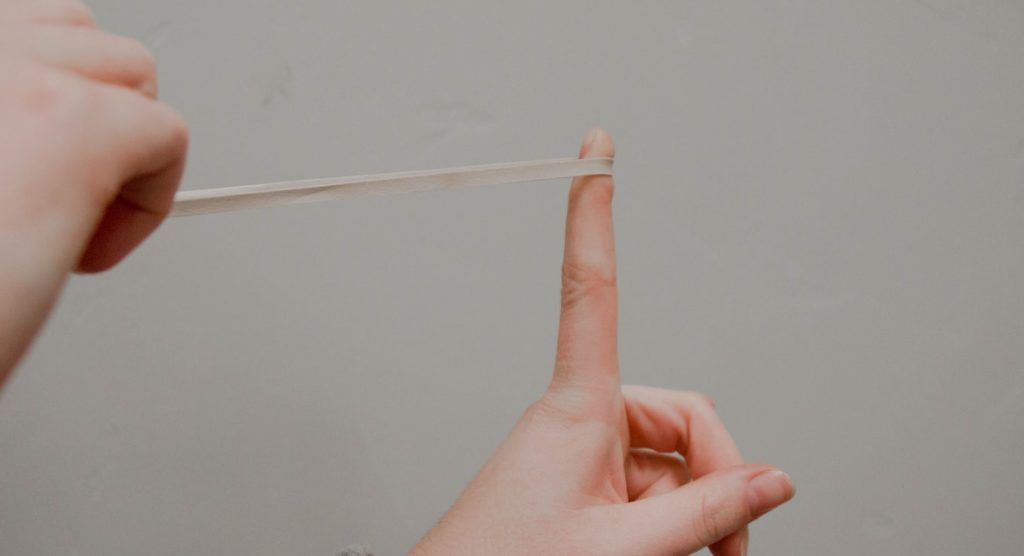

Using machine learning to compromise between names

The effect of dataset poisoning on model accuracy

Step by step recurrent neural network inference with Keras

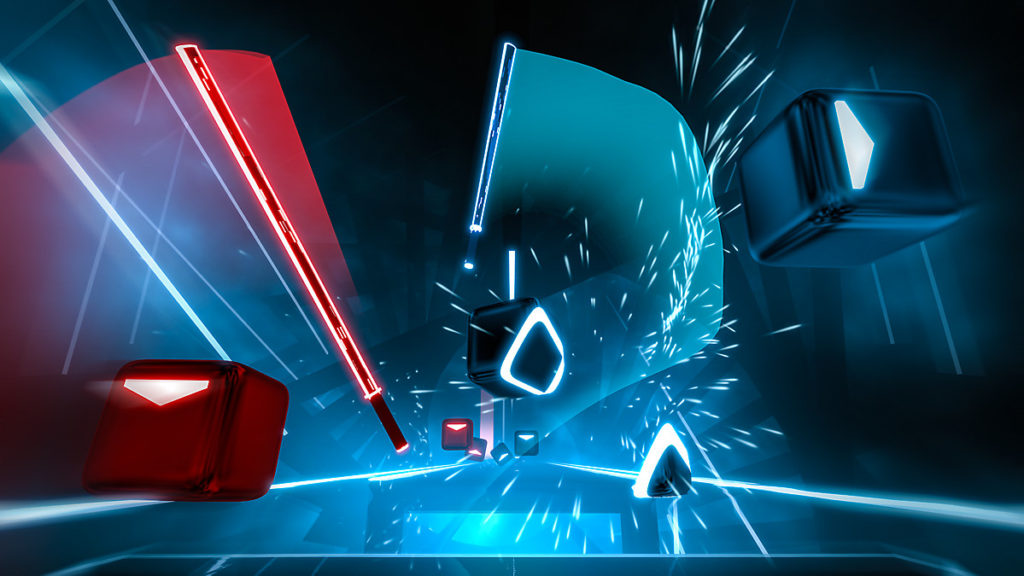

Make Beat Saber maps using machine learning (1)

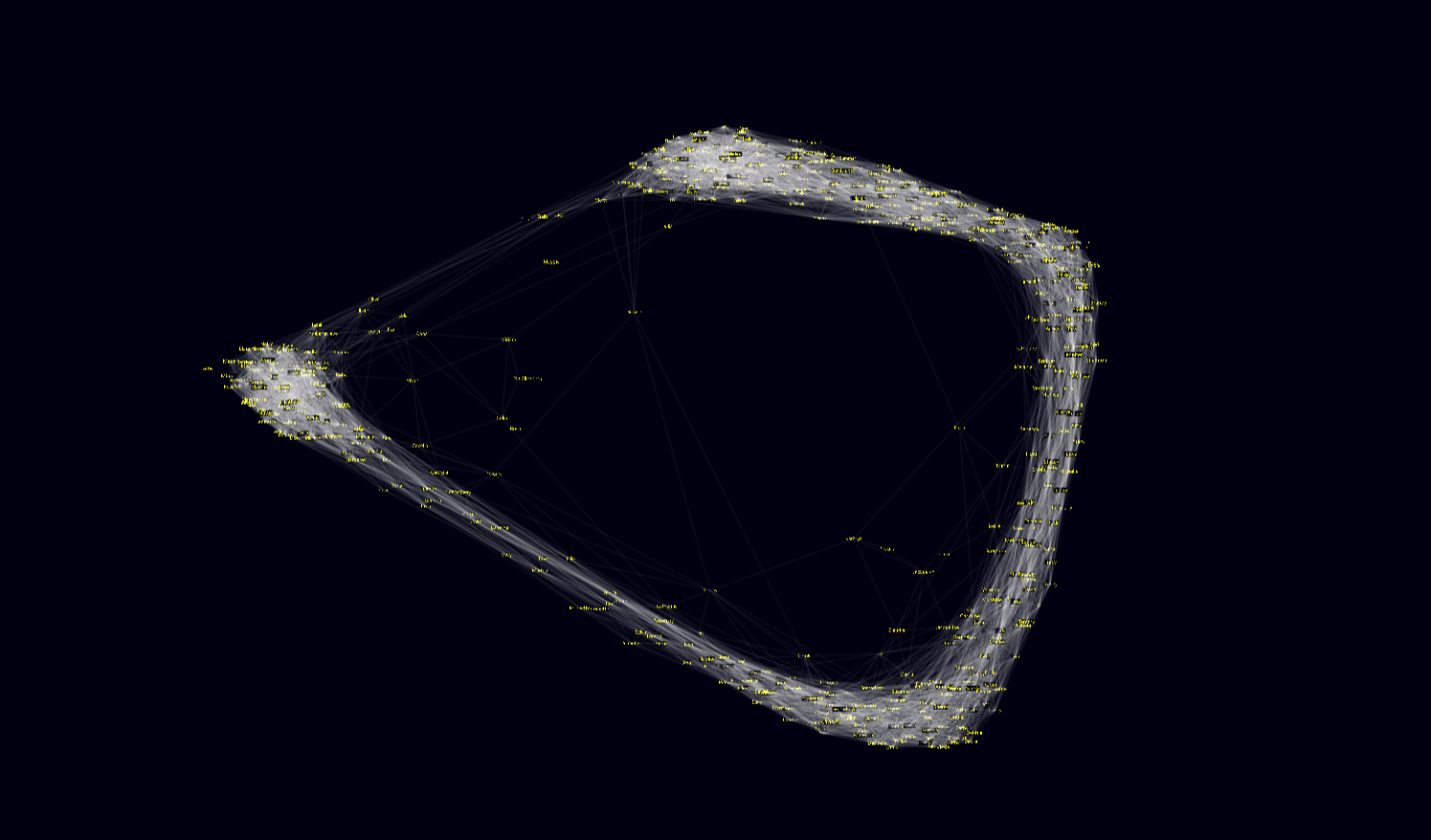

Plotting tool for FSEconomy

Reduce MNIST dimensionality using autoencoders

How not to reduce dimensionality for clustering

How to implement the K-means algorithm in TensorFlow

Creating a custom training loop in TensorFlow

On the flexibility of dense layers in Keras